Never Changing DeepSeek Will Eventually Destroy You

Author: Cecil Coppleson | Posted: 25-02-08 15:51

Chinese startup DeepSeek has built and released DeepSeek-V2, a surprisingly powerful language model. You have to understand that Tesla is in a better position than the Chinese to take advantage of new techniques like those used by DeepSeek. If you got the GPT-4 weights, again as Shawn Wang said, the model was trained two years ago. … China incorrectly argue that the two goals outlined here, intense competition and strategic dialogue, are incompatible, though for different reasons. Staying in the US versus taking a trip back to China and joining some startup that’s raised $500 million or whatever ends up being another factor in where the top engineers actually end up wanting to spend their professional careers. We validate this strategy on top of two baseline models across different scales. The training process involves generating two distinct types of SFT samples for each instance: the first couples the problem with its original response in the format `<problem, original response>`, while the second incorporates a system prompt alongside the problem and the R1 response in the format `<system prompt, problem, R1 response>`.
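The two SFT sample types described above can be sketched as a small data structure. This is a minimal illustration, not the authors' pipeline: `SFTSample`, `build_sft_pair`, and the example strings are hypothetical names and data chosen only to show how one training instance yields one sample without a system prompt and one with it.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class SFTSample:
    """One SFT example: an optional system prompt, the problem, and the target response."""
    system_prompt: Optional[str]
    problem: str
    response: str


def build_sft_pair(
    problem: str,
    original_response: str,
    r1_response: str,
    system_prompt: str,
) -> Tuple[SFTSample, SFTSample]:
    """Build both sample types for a single training instance (hypothetical helper)."""
    # Type 1: <problem, original response>, with no system prompt.
    plain = SFTSample(system_prompt=None, problem=problem, response=original_response)
    # Type 2: <system prompt, problem, R1 response>.
    guided = SFTSample(system_prompt=system_prompt, problem=problem, response=r1_response)
    return plain, guided


if __name__ == "__main__":
    plain, guided = build_sft_pair(
        problem="What is 17 * 3?",
        original_response="51",
        r1_response="17 * 3 = 34 + 17 = 51. The answer is 51.",
        system_prompt="Reason step by step and check your work.",
    )
    print(plain)
    print(guided)
```

Both samples share the same problem text; only the presence of the system prompt and the choice of target response differ.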

0.3 for the first 10T tokens, and to 0.1 for the remaining 4.8T tokens. At the small scale, we train a baseline MoE model comprising approximately 16B total parameters on 1.33T tokens. During the pre-training stage, training DeepSeek-V3 on each trillion tokens requires only 180K H800 GPU hours, i.e., 3.7 days on our cluster with 2048 H800 GPUs. The "GPU poor," by contrast, tend to pursue more incremental changes based on techniques known to work, which would improve the state-of-the-art open-source models by a moderate amount.

Jordan Schneider: Well, what is the rationale for a Mistral or a Meta to spend, I don’t know, 100 billion dollars training something and then just put it out for free?

Jordan Schneider: Let’s talk about those labs and those models.

Jordan Schneider: Is that directional information enough to get you most of the way there?

The paper presents the CodeUpdateArena benchmark to test how well large language models (LLMs) can update their knowledge about code APIs that are continuously evolving.
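The pre-training figures quoted above (the 10T/4.8T token split, 180K H800 GPU hours per trillion tokens, and a 2048-GPU cluster) can be checked with quick arithmetic. This is a minimal sketch, assuming these figures describe the same pre-training run and that throughput splits perfectly across all GPUs. The quantity held at 0.3 and then 0.1 is cut off in this text, so `scheduled_value` is a hypothetical name.

```python
# Figures quoted in the paragraph (token counts in billions so the math stays exact).
PHASE_1_TOKENS_B = 10_000        # "the first 10T tokens"
PHASE_2_TOKENS_B = 4_800         # "the remaining 4.8T tokens"
GPU_HOURS_PER_TRILLION = 180_000
NUM_GPUS = 2048


def scheduled_value(tokens_seen_b: int) -> float:
    """Step schedule from the fragment: 0.3 for the first 10T tokens, 0.1 afterwards.

    The scheduled quantity is truncated in the source, so this name is hypothetical.
    """
    return 0.3 if tokens_seen_b < PHASE_1_TOKENS_B else 0.1


def gpu_hours_for(tokens_b: int) -> int:
    """GPU hours for `tokens_b` billion tokens at 180K GPU hours per trillion tokens."""
    return tokens_b * GPU_HOURS_PER_TRILLION // 1000


def wall_clock_days(gpu_hours: float, num_gpus: int = NUM_GPUS) -> float:
    """Wall-clock days, assuming the work is spread perfectly across all GPUs."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    total_b = PHASE_1_TOKENS_B + PHASE_2_TOKENS_B
    print(f"days per trillion tokens: {wall_clock_days(GPU_HOURS_PER_TRILLION):.1f}")  # 3.7
    print(f"GPU hours for {total_b}B tokens: {gpu_hours_for(total_b):,}")  # 2,664,000
```

The 180,000 / 2048 / 24 ≈ 3.66 division reproduces the quoted "3.7 days" per trillion tokens. Multiplying by the implied 14.8T tokens gives about 2.66M GPU hours for pre-training alone.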

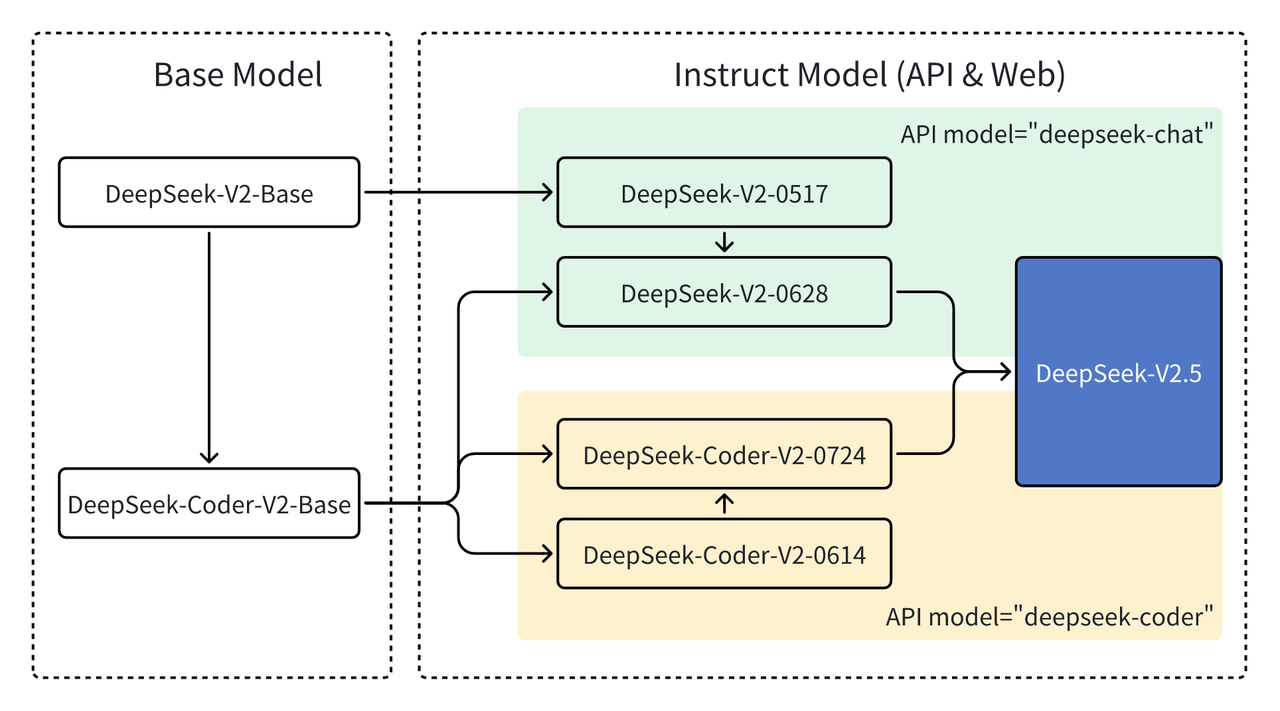

Those extremely large models are going to be very proprietary, as is the body of hard-won expertise in managing distributed GPU clusters. Now you don’t need to spend $20 million of GPU compute to do it. Assuming the rental price of the H800 GPU is $2 per GPU hour, our total training costs amount to only $5.576M. I’ve played around a fair amount with them and have come away just impressed with the performance. I want to come back to what makes OpenAI so special. The open-source world, so far, has been more about the "GPU poors." So if you don’t have a lot of GPUs, but you still want to get business value from AI, how can you do that? 32014, versus its default value of 32021 in the deepseek-coder-instruct configuration. They found this to help with expert balancing. Documentation on installing and using vLLM can be found here. The website and documentation are fairly self-explanatory, so I won’t go into the details of setting it up.
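The $5.576M figure is just GPU hours multiplied by an hourly rental price, so the arithmetic can be inverted to recover the implied compute. This is a minimal sketch that uses only the $2-per-GPU-hour assumption stated in the paragraph. The wall-clock conversion further assumes the 2048-GPU cluster mentioned earlier in the post and perfect scaling, which is an idealization.

```python
def implied_gpu_hours(total_cost_usd: float, usd_per_gpu_hour: float) -> float:
    """Invert cost = GPU hours * hourly rental price."""
    return total_cost_usd / usd_per_gpu_hour


def ideal_wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Wall-clock days if the GPU hours are spread perfectly over `num_gpus` GPUs."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    hours = implied_gpu_hours(5_576_000, 2.0)
    print(f"{hours:,.0f} GPU hours")  # 2,788,000 GPU hours
    print(f"{ideal_wall_clock_days(hours, 2048):.1f} days on 2048 GPUs")  # 56.7
```

At $2 per hour, the quoted budget corresponds to about 2.79M H800 GPU hours, or roughly eight weeks on 2048 GPUs under ideal scaling.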

And one of our podcast’s early claims to fame was having George Hotz on, where he leaked the GPT-4 mixture-of-experts details. I still think they’re worth having on this list because of the sheer number of models they have available with no setup on your end other than the API. Where do the know-how and the experience of actually having worked on these models in the past play into being able to unlock the benefits of whatever architectural innovation is coming down the pipeline or looks promising within one of the major labs? I would consider all of them on par with the major US ones. If this Mistral playbook is what’s going on for some of the other companies as well, the Perplexity ones. These models were trained by Meta and by Mistral. So if you think about mixture of experts, when you look at the Mistral MoE model, which is 8x7 billion parameters, you need about 80 gigabytes of VRAM to run it, which is the largest H100 out there. To answer this question, we need to make a distinction between services run by DeepSeek and the DeepSeek models themselves, which are open source, freely available, and starting to be offered by domestic providers.
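The VRAM point rests on a simple rule: an MoE model must keep every expert's weights in memory, even though only some experts run for each token. As a result, memory scales with total parameters times bytes per parameter. This is a minimal, weights-only sketch. Reading "8x7B" literally as 56B parameters is a naive upper bound, since experts share the non-expert layers. The estimate also ignores the KV cache, activations, and framework overhead, so it is not a prediction of the 80 GB figure quoted above.

```python
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Excludes the KV cache, activations, and framework overhead.
    """
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Naive upper bound: read "8x7B" literally as 8 * 7B parameters.
    # Real MoE models share non-expert layers, so the true total is lower.
    naive_params = 8 * 7_000_000_000
    for dtype, nbytes in BYTES_PER_PARAM.items():
        print(f"{dtype}: {weight_memory_gb(naive_params, nbytes):.0f} GB")
```

Under this bound, 16-bit weights would need about 112 GB and 8-bit weights about 56 GB. Whether such a model fits on a single 80 GB card therefore depends heavily on numeric precision.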
