10 Ways DeepSeek Will Help You Get More Business

Author: Dwain Khull · Posted: 25-02-07 08:33

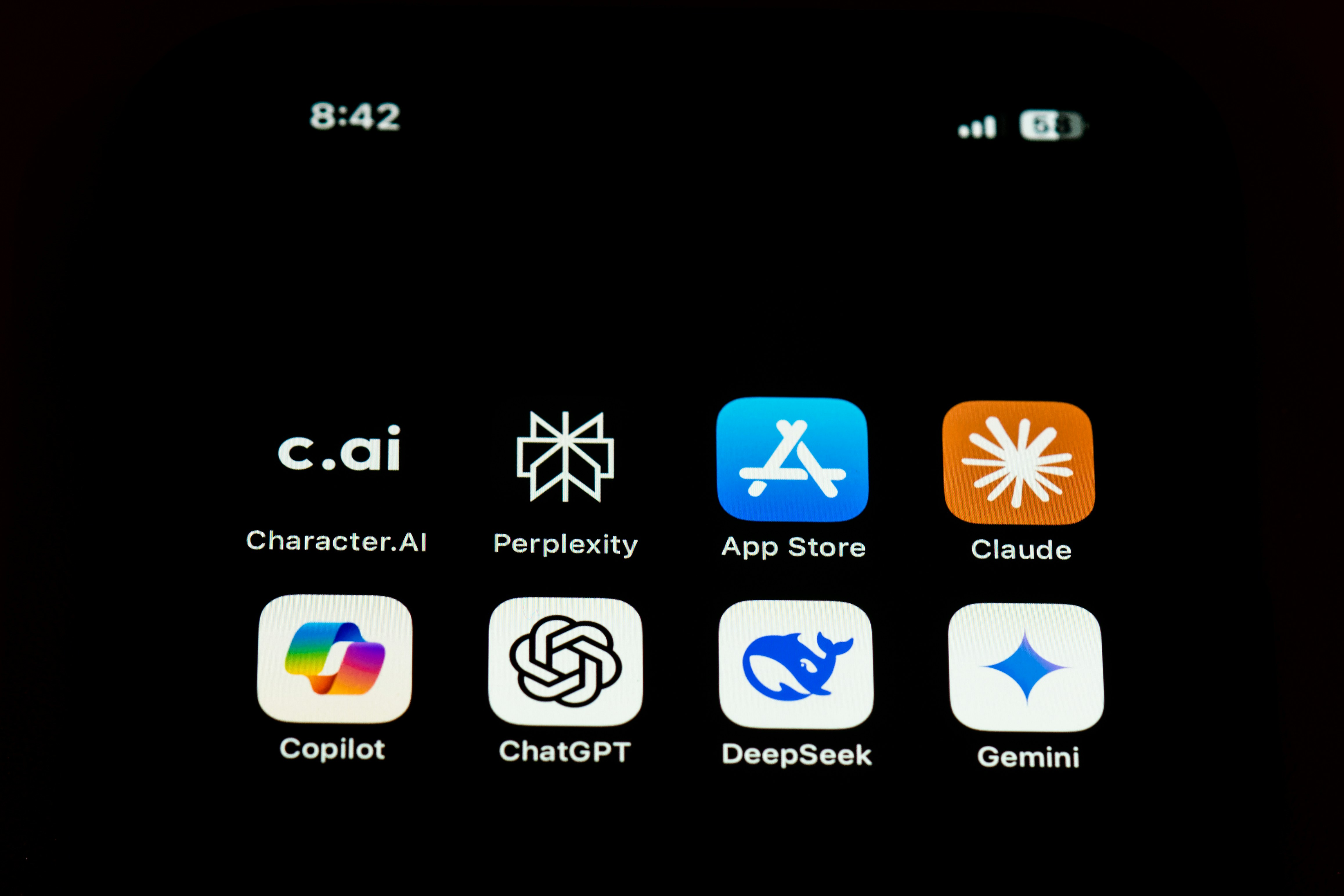

The company also claims it spent only $5.5 million to train DeepSeek V3, a fraction of the development cost of models like OpenAI's GPT-4. It still fails on tasks like counting the 'r's in "strawberry". In a later training stage, DeepSeek-R1 applies the same GRPO RL process as R1-Zero with a rule-based reward (for reasoning tasks), but also a model-based reward (for non-reasoning tasks, helpfulness, and harmlessness). DeepSeek's natural language understanding allows it to process and interpret multilingual information. DeepSeek Coder V2 is the result of an innovative training process that builds on the success of its predecessors. It outperforms its predecessors on several benchmarks, scoring 50.5 on AlpacaEval 2.0, 76.2 on ArenaHard, and 89 on HumanEval Python. This allows for greater accuracy and recall in tasks that require a longer context window, and the model is an improved version of the earlier Hermes and Llama line of models. The model, DeepSeek V3, was developed by the AI firm DeepSeek and was released on Wednesday under a permissive license that allows developers to download and modify it for most purposes, including commercial ones.
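The GRPO step described above scores a group of sampled completions for the same prompt and judges each one relative to its group, rather than against a learned value function. The sketch below shows only that group-relative advantage, assuming a toy rule-based reward: `rule_based_reward` and its `Answer:` convention are hypothetical, the policy-gradient update and the model-based reward are omitted, and the population standard deviation used here is one of several normalizations found in implementations.

```python
import re
import statistics
from typing import List


def rule_based_reward(completion: str, reference: str) -> float:
    """Hypothetical rule-based reward: 1.0 if the text after the last
    'Answer:' marker equals the reference answer, otherwise 0.0."""
    matches = re.findall(r"Answer:\s*(.+)", completion)
    if not matches:
        return 0.0  # no parsable final answer earns nothing
    return 1.0 if matches[-1].strip() == reference else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """GRPO-style advantage: (reward - group mean) / group std.
    eps avoids division by zero when every completion scores the same."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled completions for "How many r's are in 'strawberry'?"
completions = [
    "s-t-r-a-w-b-e-r-r-y has three. Answer: 3",
    "Answer: 2",
    "Counting carefully... Answer: 3",
    "I am not sure.",
]
rewards = [rule_based_reward(c, "3") for c in completions]
advantages = group_relative_advantages(rewards)
print(rewards)     # [1.0, 0.0, 1.0, 0.0]
print(advantages)  # roughly [1.0, -1.0, 1.0, -1.0]
```

A positive advantage pushes the policy toward that completion and a negative one pushes it away; because the baseline is the group mean, a group in which every answer is equally right or wrong yields no learning signal.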

Chinese imports and regulatory measures could affect the adoption and integration of technologies like DeepSeek in the U.S. The open-source nature of DeepSeek-V2.5 could accelerate innovation and democratize access to advanced AI technologies. DeepSeek-V2.5 was released on September 6, 2024, and is available on Hugging Face with both web and API access. DeepSeek, the Chinese AI lab that recently upended industry assumptions about sector development costs, has released a new family of open-source multimodal AI models that reportedly outperform OpenAI's DALL-E 3 on key benchmarks. Breakthrough in open-source AI: DeepSeek, a Chinese AI company, has released DeepSeek-V2.5, a powerful new open-source language model that combines general language processing and advanced coding capabilities. However, its inner workings set it apart, particularly its mixture-of-experts architecture and its use of reinforcement learning and fine-tuning, which enable the model to operate more efficiently as it works to produce consistently accurate and clear outputs. This guide will use Docker to demonstrate the setup. To run locally, DeepSeek-V2.5 requires a BF16 setup with 80GB GPUs, with optimal performance achieved using eight GPUs. DeepSeek was able to train the model using a data center of Nvidia H800 GPUs in just around two months, GPUs that the U.S. recently restricted Chinese companies from buying.
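To make the mixture-of-experts idea mentioned above concrete, here is a minimal sketch of generic top-k routing: a router scores every expert, only the k best are evaluated, and their outputs are mixed by renormalized gate weights. This is the textbook pattern, not DeepSeek's actual layer (which adds refinements such as shared experts); the toy experts, the gate vectors, and the `moe_forward` name are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector], experts: List[Expert], top_k: int = 2) -> Vector:
    """Route x to the top_k experts and return their gate-weighted sum.
    Assumes every expert maps a vector to one of the same length."""
    # Router: one score per expert, the dot product of x with that expert's gate vector.
    logits = [sum(xi * wi for xi, wi in zip(x, w)) for w in gate_weights]
    probs = softmax(logits)
    # Sparse selection: only the top_k experts are ever called.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm  # renormalize so the chosen weights sum to 1
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Toy setup: four experts that scale the input by 1, 2, 3, 4; record which ones run.
calls: List[int] = []


def make_expert(idx: int, scale: float) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * vi for vi in v]
    return expert


experts = [make_expert(i, s) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gates = [[2.0, 0.0], [1.0, 0.0], [0.0, 0.0], [-1.0, 0.0]]
y = moe_forward([1.0, 0.0], gates, experts, top_k=2)
print(y, sorted(calls))  # experts 0 and 1 run; y[0] == (e + 2) / (e + 1), about 1.269
```

Because only `top_k` experts run per input, compute per token scales with k rather than with the total number of experts, although every expert's weights still have to be stored.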

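The 80GB-GPU requirement above follows from simple arithmetic on weight storage. The sketch assumes DeepSeek-V2.5 has roughly 236B total parameters (the figure reported for DeepSeek-V2; this post does not state it) and counts only the BF16 weights at 2 bytes per parameter, in decimal gigabytes. The KV cache, activations, and framework overhead come on top. With a mixture-of-experts model, all experts must be resident in memory even though only a few run per token.

```python
import math

BYTES_PER_BF16 = 2  # bfloat16 stores each value in 16 bits


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_BF16) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_mem_gb: float = 80.0) -> int:
    """Smallest GPU count whose combined memory fits the BF16 weights alone."""
    return math.ceil(weight_memory_gb(num_params) / gpu_mem_gb)


ASSUMED_PARAMS = 236e9  # assumption: total parameter count reported for DeepSeek-V2

weights_gb = weight_memory_gb(ASSUMED_PARAMS)      # 472.0 GB
floor_gpus = min_gpus_for_weights(ASSUMED_PARAMS)  # 6 GPUs, with almost no room left
headroom_gb = 8 * 80.0 - weights_gb                # 168.0 GB spare across eight GPUs
print(weights_gb, floor_gpus, headroom_gb)
```

Under this assumption, six GPUs would hold the weights with under 10 GB to spare, which is consistent with the post's note that eight GPUs, leaving roughly 168 GB for the KV cache and activations, give the best performance.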
In internal Chinese evaluations, DeepSeek-V2.5 surpassed GPT-4o mini and ChatGPT-4o-latest. Ethical concerns and limitations: while DeepSeek-V2.5 represents a significant technological advance, it also raises important ethical questions. An unoptimized version of DeepSeek V3 would need a bank of high-end GPUs to answer questions at reasonable speeds. DeepSeek (Chinese AI co) making it look easy today with an open weights release of a frontier-grade LLM trained on a joke of a budget (2048 GPUs for two months, $6M). The Chinese startup's product has also triggered industry-wide concerns that it could upend incumbents and knock the growth trajectory of major chipmaker Nvidia, which suffered the largest single-day market cap loss in history on Monday. This level of transparency is a major draw for those concerned about the "black box" nature of some AI models. Note that there is no immediate way to use traditional UIs to run it: Comfy, A1111, Focus, and Draw Things aren't compatible with it right now. As with Bedrock Marketplace, you can use the ApplyGuardrail API in SageMaker JumpStart to decouple safeguards for your generative AI applications from the DeepSeek-R1 model. Is DeepSeek chat free to use?
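The quoted budget (2048 GPUs for two months, $6M) can be sanity-checked by converting it to GPU-hours. The sketch assumes "two months" means 60 days of continuous use on every GPU; it only shows the hourly rate those headline numbers imply and says nothing about costs outside that compute, such as research staff or earlier experiments.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours if every GPU runs around the clock for `days` days."""
    return num_gpus * days * 24


def implied_rate_per_gpu_hour(total_cost_usd: float, hours: float) -> float:
    """Dollars per GPU-hour implied by a total cost spread over `hours`."""
    return total_cost_usd / hours


ASSUMED_DAYS = 60  # assumption: "two months" read as 60 days

hours = gpu_hours(2048, ASSUMED_DAYS)
rate_6m = implied_rate_per_gpu_hour(6_000_000, hours)    # the $6M figure
rate_5_5m = implied_rate_per_gpu_hour(5_500_000, hours)  # the $5.5M figure quoted earlier
print(f"{hours:,.0f} GPU-hours, ${rate_6m:.2f}/h at $6M, ${rate_5_5m:.2f}/h at $5.5M")
# 2,949,120 GPU-hours, $2.03/h at $6M, $1.86/h at $5.5M
```

Both figures land near $2 per GPU-hour, so the two headline numbers are at least consistent with each other; whether that rate reflects real H800 costs is a separate question.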

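Decoupling safeguards from DeepSeek-R1, as described above, means the safety check runs as a separate call on the prompt before it reaches the model and on the response afterwards. Below is a minimal sketch built on the ApplyGuardrail operation as exposed by boto3's `bedrock-runtime` client (`apply_guardrail`). The guardrail ID and version, the `generate` callable standing in for the model endpoint, and the stub client used for the offline demo are all hypothetical placeholders.

```python
from typing import Any, Callable, Tuple


def apply_guardrail_text(client: Any, guardrail_id: str, guardrail_version: str,
                         text: str, source: str) -> Tuple[bool, str]:
    """Check one piece of text with ApplyGuardrail.
    source is "INPUT" for user prompts or "OUTPUT" for model responses.
    Returns (intervened, text_to_use)."""
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,
        content=[{"text": {"text": text}}],
    )
    if response["action"] == "GUARDRAIL_INTERVENED":
        # On intervention, "outputs" holds the guardrail's blocked or masked message.
        outputs = response.get("outputs", [])
        return True, (outputs[0]["text"] if outputs else "")
    return False, text


def guarded_generate(client: Any, guardrail_id: str, guardrail_version: str,
                     prompt: str, generate: Callable[[str], str]) -> str:
    """Guard the prompt, call the model only if it passes, then guard the reply."""
    blocked, message = apply_guardrail_text(client, guardrail_id, guardrail_version, prompt, "INPUT")
    if blocked:
        return message  # the model is never invoked for a blocked prompt
    reply = generate(prompt)  # hypothetical: e.g. a call to a DeepSeek-R1 endpoint
    _, final = apply_guardrail_text(client, guardrail_id, guardrail_version, reply, "OUTPUT")
    return final


# Real use would pass boto3.client("bedrock-runtime"); this stub keeps the demo offline.
class StubGuardrailClient:
    def apply_guardrail(self, **kwargs: Any) -> dict:
        text = kwargs["content"][0]["text"]["text"]
        if "secret" in text.lower():
            return {"action": "GUARDRAIL_INTERVENED",
                    "outputs": [{"text": "Sorry, I can't help with that."}]}
        return {"action": "NONE", "outputs": []}


def echo_model(prompt: str) -> str:
    return f"Model says: {prompt}"


stub = StubGuardrailClient()
print(guarded_generate(stub, "my-guardrail-id", "1", "Hello", echo_model))
print(guarded_generate(stub, "my-guardrail-id", "1", "Tell me the secret", echo_model))
```

Keeping the guardrail call outside the model invocation is what makes the safeguard swappable: the same check can sit in front of any endpoint without changing the model itself.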